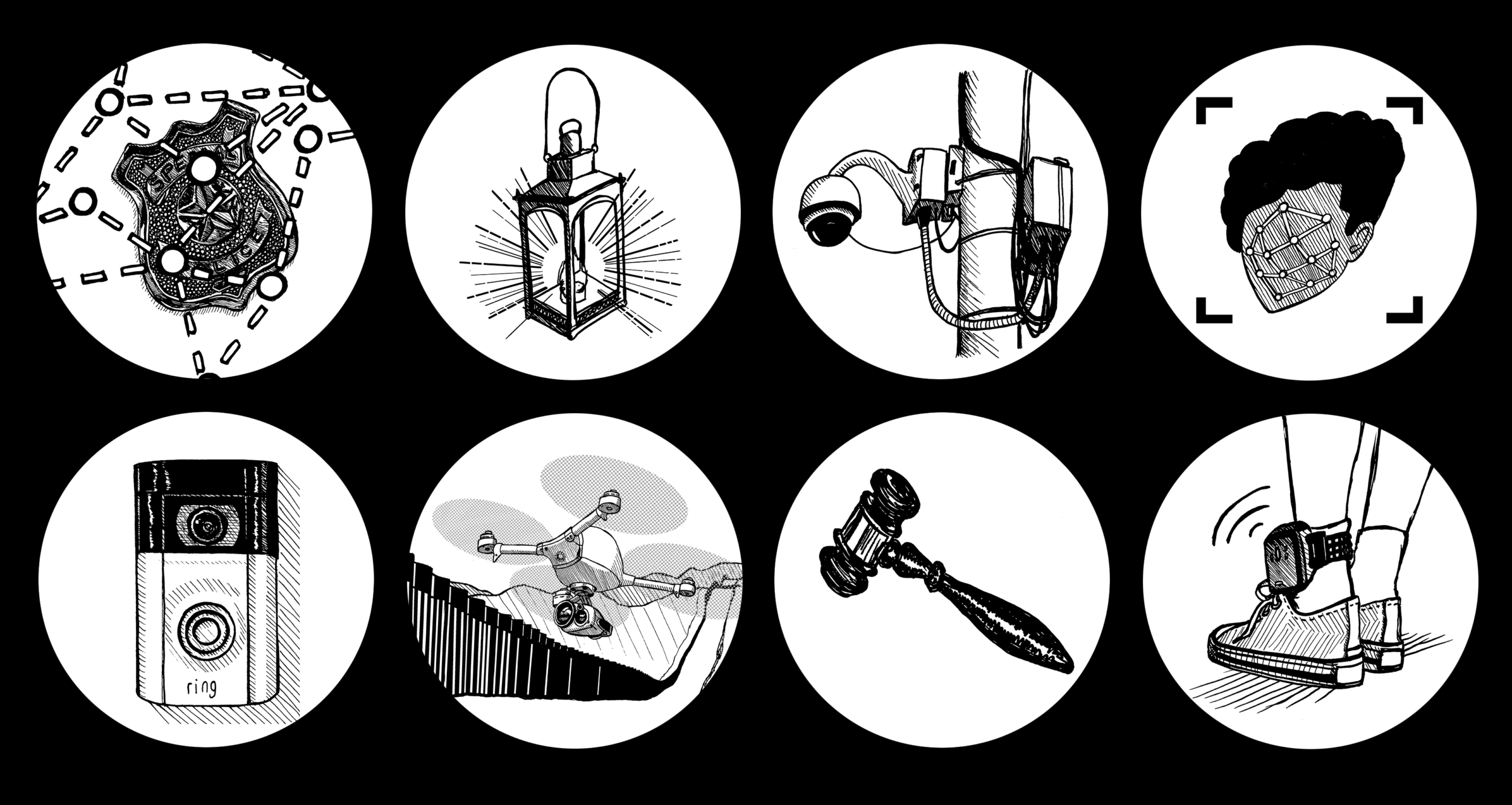

Today, incarcerated people in some facilities are being forced to wear hard plastic bracelets that transmit their live biometric data to the state. At the same time, algorithm-driven technologies are being used to predict who might commit a crime, to detain immigrants and identify sites for ICE raids, and to jail Black people misidentified by facial recognition systems.

It has become increasingly clear that we need a term for the insidious ways artificial intelligence is being used to criminalize society’s most vulnerable while surveilling the rest of us on a daily basis and generating billions of dollars in the process. Carceral AI, a term coined by researcher Dasha Pruss and a team of interdisciplinary scholars, captures this phenomenon. Pruss’s research serves as both the inspiration for and the first installment in our own Carceral AI series, which will address an array of topics ranging from AI-driven facial (mis)recognition to efforts to use AI to justify the revival of so-called “predictive” policing.

The series will continue through 2026. To make sure you never miss an installment, sign up for our weekly newsletter.

Art: Dasha Pruss